Artificial intelligence & robotics

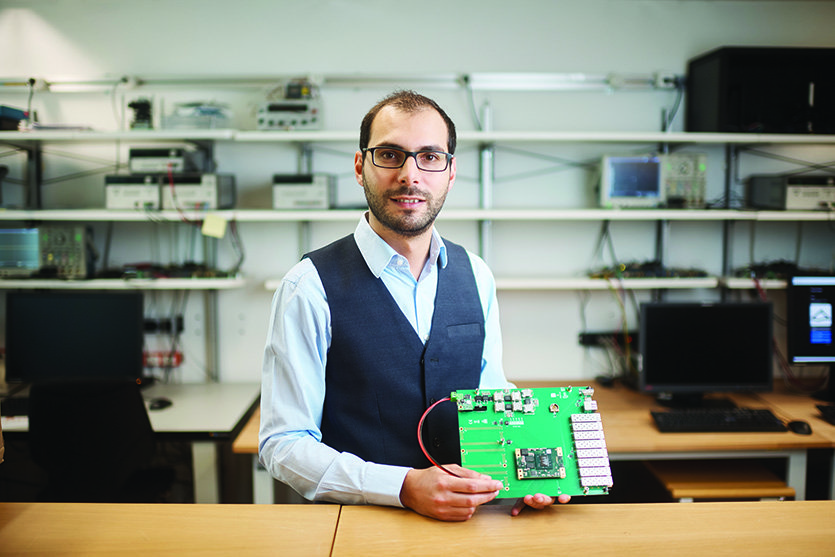

Manuel Le Gallo

He uses novel computer designs to make AI less power hungry.

China

Jinfeng Yi

His research makes AI applications safer and more reliable.

China

Huichan Zhao

Perception for soft intelligent prostheses.

Global

Atima Lui

She’s using technology to correct the cosmetics industry’s bias toward light skin.

Latin America

Renato Borges

Monitoring farms using IoT and AI to help farmers reduce costs and increase yields.